
5 surprising items the Smart Item Picker can pick (and how)


You’ve probably noticed how far item pickers have come. They now do everything from picking random fruit off conveyor belts to handling traditionally ‘hard-to-pick’ items, and their abilities stretch far beyond simple, uniform products.

Thanks to AI vision technology and over a decade of experience in the pick-and-place industry, our Smart Item Picker has evolved well beyond its early days. It can now reliably handle some of the most challenging products out there.

In this article, we’ll explore five surprising product types our robots can pick, along with real-world examples and insights into how it all works.

1. Soft and delicate clothing items

Loose clothing is notoriously difficult for item pickers to handle. Garments are often delicate and can easily get sucked into the gripper if it relies on vacuum suction.

To avoid damage and make picking more reliable, garments are usually packed in thin plastic bags or bubble wrap. This protects the clothing item and gives it a more defined shape, making it easier to handle.

To handle clothes wrapped in plastic bags, we developed an algorithm that assesses the surface of an item to identify the spots with the highest probability of a successful pick.

The algorithm randomly samples different areas on the surface of the clothing item and assigns each one a score. The score is based on three key factors, and the highest-scoring area wins:

- Surface flatness under the suction cup

- Proximity to the item’s center

- Available space for multiple suction cups

Areas with plastic wrinkles (which can break the vacuum seal) are automatically ruled out, while flatter regions are prioritized. If the item has carton labels, the algorithm gives preference to those areas as well, as they provide more stable suction points.
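The sampling-and-scoring idea can be sketched in Python. This is a minimal sketch under stated assumptions, not our production algorithm: the item is represented as a hypothetical height map (a list of rows, heights in meters) cropped to the item, a boolean `label_mask` marks label pixels, and the function names, weights, window sizes, and the 4 mm wrinkle tolerance are illustrative values chosen for the example. Flatness is measured as the height spread under one cup, proximity to the center as normalized distance to the map center, and available space as the share of a larger window that sits at the same height.

```python
import random
from dataclasses import dataclass


@dataclass
class Candidate:
    row: int
    col: int
    score: float


def window(height, r, c, radius):
    """Heights of the cells in a square window around (r, c), clipped to the map."""
    rows, cols = len(height), len(height[0])
    return [height[i][j]
            for i in range(max(0, r - radius), min(rows, r + radius + 1))
            for j in range(max(0, c - radius), min(cols, c + radius + 1))]


def score_pick_points(height, label_mask, n_samples=200, cup_radius=2,
                      cluster_radius=4, wrinkle_tol=0.004,
                      weights=(0.4, 0.3, 0.3), label_bonus=0.5, seed=0):
    """Randomly sample surface points and rank them best first (hypothetical scoring)."""
    rng = random.Random(seed)
    rows, cols = len(height), len(height[0])
    cr, cc = (rows - 1) / 2, (cols - 1) / 2
    max_dist = (cr ** 2 + cc ** 2) ** 0.5 or 1.0
    w_flat, w_center, w_space = weights
    cluster_area = (2 * cluster_radius + 1) ** 2
    found = {}
    for _ in range(n_samples):
        r, c = rng.randrange(rows), rng.randrange(cols)
        cup = window(height, r, c, cup_radius)
        relief = max(cup) - min(cup)            # height spread under one cup
        if relief > wrinkle_tol:                # a wrinkle would break the seal: rule out
            continue
        flatness = 1.0 - relief / wrinkle_tol
        centrality = 1.0 - ((r - cr) ** 2 + (c - cc) ** 2) ** 0.5 / max_dist
        # Share of a larger window at the same height, i.e. room for extra cups;
        # cells that fall off the item count as unusable.
        level = [h for h in window(height, r, c, cluster_radius)
                 if abs(h - height[r][c]) <= wrinkle_tol]
        space = len(level) / cluster_area
        score = w_flat * flatness + w_center * centrality + w_space * space
        if label_mask[r][c]:                    # labels give a firmer suction point
            score += label_bonus
        found[(r, c)] = Candidate(r, c, score)  # repeated samples get the same score
    return sorted(found.values(), key=lambda cand: cand.score, reverse=True)


height = [[0.0] * 9 for _ in range(9)]
for r in range(3):
    for c in range(3):
        if (r + c) % 2 == 0:
            height[r][c] = 0.01                 # wrinkled corner: 1 cm ridges in the bag
labels = [[5 <= r <= 7 and 5 <= c <= 7 for c in range(9)] for r in range(9)]
ranked = score_pick_points(height, labels)
print(ranked[0])
```

In this toy example, points whose cup window touches the wrinkled corner never appear in the ranking, and any sampled label point outranks unlabeled ones because of the label bonus. The weights would need tuning per item type.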

Once the optimal pick point is selected, our motion planning system generates precise approach and pick trajectories designed to minimize swing and reduce the risk of dropping the item. Combined with our fashion-specific suction cups, this approach ensures a secure grip and gentle handling, keeping each garment stable from pick to place.
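We don't describe our motion planner in detail here. As an illustration only, one common textbook way to reduce swing is a minimum-jerk profile, s(τ) = 10τ³ - 15τ⁴ + 6τ⁵, which starts and ends each move with zero velocity and zero acceleration. The sketch below uses that swapped-in technique, with hypothetical function names and a hypothetical 15 cm approach clearance, to build a path that moves above the pick point, descends vertically, and lifts straight back up.

```python
def min_jerk(start, goal, duration, t):
    """Point at time t on a minimum-jerk move from start to goal (x, y, z in meters)."""
    tau = min(max(t / duration, 0.0), 1.0)
    s = 10 * tau ** 3 - 15 * tau ** 4 + 6 * tau ** 5   # 0 -> 1, zero vel/acc at ends
    return tuple(a + (b - a) * s for a, b in zip(start, goal))


def pick_trajectory(current, pick_point, clearance=0.15, seg_time=0.8, steps=20):
    """Hypothetical pick sequence: move above the item, descend, then lift straight up."""
    x, y, z = pick_point
    above = (x, y, z + clearance)
    segments = [(current, above), (above, pick_point), (pick_point, above)]
    path = [current]
    for a, b in segments:
        # Skip k = 0: each segment starts where the previous one ended.
        path += [min_jerk(a, b, seg_time, seg_time * k / steps)
                 for k in range(1, steps + 1)]
    return path


path = pick_trajectory(current=(0.4, -0.2, 0.5), pick_point=(0.1, 0.3, 0.05))
print(len(path), path[0], path[-1])
```

Because every segment eases in and out, the gripper never starts or stops abruptly, which is what keeps a dangling garment from swinging.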

2. Shiny, transparent packaging

Vision systems have a hard time fully “seeing” packaging that is clear or reflective. When edges aren’t clearly visible, it becomes difficult to identify reliable grasp points. This type of packaging is common for products like pens, toothbrushes, razors, and batteries.

While our models are trained to handle a wide variety of challenging scenes, good lighting remains essential for capturing high-quality data and maximizing real-time detection performance.

To make the system more robust to challenging lighting conditions and less dependent on the quality of the camera data, we are developing a new depth data processing pipeline. It includes a deep learning model that improves the overall quality of the data without requiring extra hardware.

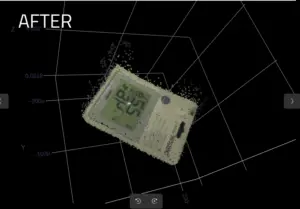

The pictures below show the potential of this new pipeline:

The picture above shows the depth data without the new processing pipeline. It contains many holes and a lot of noise.

Once the data passes through our new deep learning pipeline, the output improves dramatically, with smoother surfaces that more closely match the real item.

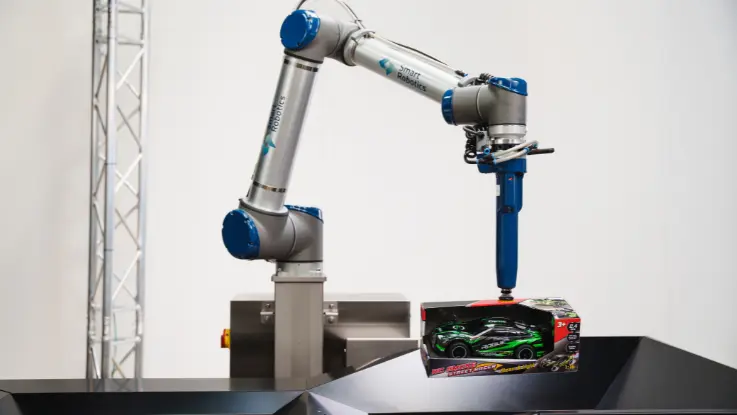

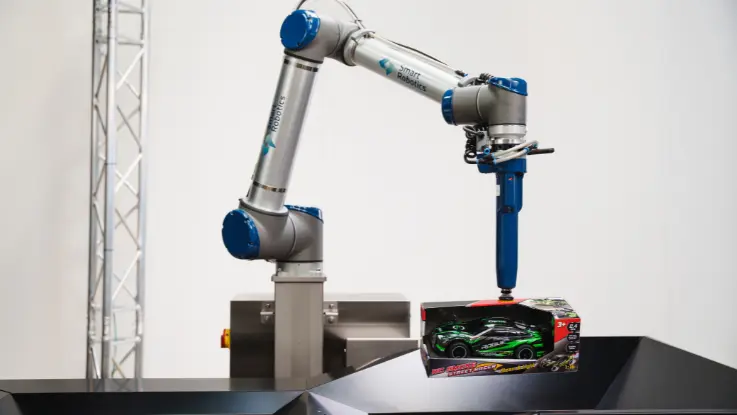
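Our deep learning model isn't shown here. For comparison, a classical, non-learned baseline for the same two problems, holes and noise, fits in a few lines of Python. The sketch assumes a depth map stored as a list of rows in meters, with 0.0 marking pixels where the camera returned no depth; the function names are hypothetical. It fills holes by repeatedly averaging valid neighbors and then suppresses speckle with a 3×3 median filter.

```python
from statistics import median


def fill_holes(depth, invalid=0.0, max_iters=50):
    """Fill missing pixels by repeatedly averaging their valid 4-neighbors."""
    d = [row[:] for row in depth]
    rows, cols = len(d), len(d[0])
    for _ in range(max_iters):
        updates = {}
        for r in range(rows):
            for c in range(cols):
                if d[r][c] != invalid:
                    continue
                nbrs = [d[i][j] for i, j in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                        if 0 <= i < rows and 0 <= j < cols and d[i][j] != invalid]
                if nbrs:
                    updates[(r, c)] = sum(nbrs) / len(nbrs)
        if not updates:                         # no holes left, or none touch valid data
            break
        for (r, c), value in updates.items():   # apply all updates at once
            d[r][c] = value
    return d


def median_denoise(depth):
    """3x3 median filter; border pixels use the part of the window inside the map."""
    rows, cols = len(depth), len(depth[0])
    return [[median([depth[i][j]
                     for i in range(max(0, r - 1), min(rows, r + 2))
                     for j in range(max(0, c - 1), min(cols, c + 2))])
             for c in range(cols)]
            for r in range(rows)]


raw = [[0.50, 0.50, 0.50],
       [0.50, 0.00, 0.50],
       [0.50, 0.80, 0.50]]                      # one hole and one noisy spike
clean = median_denoise(fill_holes(raw))
```

Filters like these only interpolate from nearby pixels, so they cannot recover an edge or surface that is missing entirely from the raw data.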

3. Oddly shaped & colorful toys

Picking up toys is challenging for robotic item pickers, as you might expect. Toys can be made of any material, come in any shape, and be of any size. Usually, that means no two items look the same on a conveyor belt or in a tote.

Packaging makes things even trickier. Light can bounce off colorful boxes, making it harder for the system to detect where to pick.

With AI vision and machine learning, the Smart Item Picker can adapt its grip on the fly to handle this wide variety. Lighting is, however, a critical factor here, as it directly affects the quality of the data used for calculations.

To improve item detection, our models are trained on a wide variety of items and environments, allowing them to handle challenging scenes where lighting or data may be imperfect. As a result, we can often detect items even when reflections partially obscure their centers or edges.

This adaptability is especially useful for toy makers, wholesalers, and e-commerce warehouses, where quick and accurate picking is crucial during busy times like the holidays.

4. Protective gaming cases

Even something as simple and sturdy as a gaming case can be hard for robots to pick. These cases often come in shiny cardboard sleeves that reflect light, making it hard for the vision system to ‘see’ where to grab them.

If the cases sit in a cluttered tote with many overlapping products, the task gets even harder from both a vision and a task-planning perspective. The system has to plan carefully how to approach and lift the item without hitting surrounding objects, which requires precise motion planning.

To tackle this, the Smart Item Picker uses deep learning models trained to recognize objects in shiny, reflective packaging. As with the oddly shaped toys discussed above, good lighting plays a key role here. While the system is trained to handle tricky scenes, proper lighting helps it capture clean, high-quality data, making it much easier to find reliable grasp points even in challenging conditions.

5. Lotion, shampoo and beauty products

Beauty products such as makeup come in many different shapes, sizes, and packaging types. This makes sense, since each product is designed to appeal to a specific target audience. However, this variety can make it challenging for item pickers to do their job well.

For cosmetics and beauty products, we use the same algorithm described in the “soft and delicate clothing items” section. In short, the algorithm simulates how well the suction cup would stick to different parts of an item and rules out areas where the grip wouldn’t be secure, such as round edges or uneven surfaces.
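The idea of simulating the seal can be illustrated with a rim-contact check: imagine pressing a round cup down until its lip touches the highest point beneath it, then measure how far the rest of the lip is from the surface. This is a hypothetical sketch, not our algorithm; it assumes a height map in meters, a cup radius in grid cells, a 3 mm lip compliance, and 24 sample points around the rim, all chosen for illustration. On a flat cap every rim point touches, while on a rounded shoulder or past an edge part of the lip hangs in the air, so the point is ruled out.

```python
import math


def rim_seal_ok(height, r, c, cup_radius, compliance=0.003, n_rim=24):
    """Hypothetical seal check for a round cup centered on (r, c)."""
    rows, cols = len(height), len(height[0])
    rim = []
    for k in range(n_rim):
        angle = 2 * math.pi * k / n_rim
        i = round(r + cup_radius * math.sin(angle))
        j = round(c + cup_radius * math.cos(angle))
        if not (0 <= i < rows and 0 <= j < cols):
            return False                     # lip hangs off the item
        rim.append(height[i][j])
    lip = max(rim)                           # the cup stops at the first contact point
    return max(lip - h for h in rim) <= compliance   # largest gap under the lip


# Flat cap (5 cm) over columns 0-5, falling away to 0 beyond the edge.
cap = [[0.05 if c <= 5 else 0.0 for c in range(11)] for r in range(11)]
# A dome: height drops with squared distance from the center.
dome = [[0.05 - 0.002 * ((r - 5) ** 2 + (c - 5) ** 2) for c in range(11)]
        for r in range(11)]
print(rim_seal_ok(cap, 5, 2, cup_radius=2))    # True: well inside the cap
print(rim_seal_ok(cap, 5, 4, cup_radius=2))    # False: rim crosses the edge
print(rim_seal_ok(dome, 5, 5, cup_radius=3))   # False: surface curves away
```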

This approach allows the Smart Item Picker to consistently pick oddly shaped items at the ideal location (for example, picking at the center of the beauty product below).

This is especially important in the beauty and personal care industry, where large volumes of items in many different sizes and shapes are sold. Logistics teams in this industry can rely on the Smart Item Picker to make successful picks consistently.

How does vision work in item picking?

To learn how item pickers decide what to pick and how to pick items, watch this video:

In short, the video shows how our AI agent uses both 2D and 3D data to choose the pick with the highest chance of success. In the video, the largest and highest item in the tote is picked first.

The 3D color map then guides the robot’s pick order:

1. Green – pick first!

2. Yellow

3. Orange

4. Red

After each pick, a new image is captured to prepare for the next.
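The pick-order loop can be sketched as follows. This is an illustrative sketch only: the `Item` fields, the 50/50 weighting of height and visible area, the color thresholds, and the `capture` callback standing in for the camera are all hypothetical. It ranks the items in each fresh capture, favoring the highest and largest ones, maps each score onto the same green/yellow/orange/red scale, picks the top item, and captures again.

```python
from dataclasses import dataclass

COLORS = ("green", "yellow", "orange", "red")   # pick first -> pick last
THRESHOLDS = (0.75, 0.5, 0.25)                  # hypothetical score cut-offs


@dataclass
class Item:
    name: str
    top_height: float    # meters above the tote floor (from 3D data)
    visible_area: float  # square meters visible from above


def rank_items(items):
    """Score the items in one capture; tall and large items first (hypothetical weights)."""
    max_h = max(item.top_height for item in items) or 1.0
    max_a = max(item.visible_area for item in items) or 1.0
    scored = [(0.5 * item.top_height / max_h + 0.5 * item.visible_area / max_a, item)
              for item in items]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


def color_for(score):
    """Map a score in [0, 1] onto the color scale."""
    for color, threshold in zip(COLORS, THRESHOLDS):
        if score >= threshold:
            return color
    return COLORS[-1]


def pick_all(tote, capture):
    """Pick until the tote is empty, taking a fresh capture before every pick."""
    order = []
    while tote:
        _, best = rank_items(capture(tote))[0]
        order.append(best.name)
        tote.remove(best)    # simulate the pick
    return order


tote = [Item("pen", 0.02, 0.001), Item("box", 0.10, 0.02), Item("toy", 0.06, 0.01)]
print([(item.name, color_for(score)) for score, item in rank_items(tote)])
# [('box', 'green'), ('toy', 'yellow'), ('pen', 'red')]
print(pick_all(list(tote), capture=lambda remaining: list(remaining)))
# ['box', 'toy', 'pen']
```

Re-ranking after every pick matters because removing one item can uncover or shift the others, so the previous color map is no longer valid.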

In short

Automating item picking can feel like a big risk for many logistics and e-commerce businesses. It’s natural to wonder: “Can it really handle all our different products?” or “What happens if something can’t be picked?”

As we’ve seen in this article, modern AI vision, task planning, and motion planning have come a long way. An important concept behind this technology is that it is gripper-agnostic: if a customer requires a special gripper, we can still deliver.

By combining deep learning models that identify pick points where the item’s weight is evenly distributed for gentle handling with advanced depth data processing for even the trickiest items, the Smart Item Picker can now handle a wide range of products, from soft clothing to shiny, reflective packaging.

For logistics teams, that means faster operations with little to no manual intervention. With technology like this, “hard-to-pick” items are quickly becoming a thing of the past.
